How do users access and learn API technology?

Method: Eye Tracking, Retrospective Think Aloud

Timeline: 14 Weeks

Category: Research

Abstract

Cooper Hewitt recently launched a new documentation website for their API. We were tasked with uncovering insights about the new platform’s learnability for both novice and advanced users, and identifying appropriate inpoints to the documentation from the museum’s main homepage. Using eye tracking technology and retrospective think aloud methods, we found that while the site was well designed and followed standard documentation practices, prioritizing exemplary content and adding a documentation panel to the code editor would better support and encourage prospective users.

My Role

During the course of this project, I was responsible for taking point in client communication, moderating eye tracking sessions, analyzing test data, developing recommendations and accompanying mockups based on that data, and creating a slide deck report to present our team’s findings.

The Report

Click here for the full report

A Broad Scope and Two User Groups

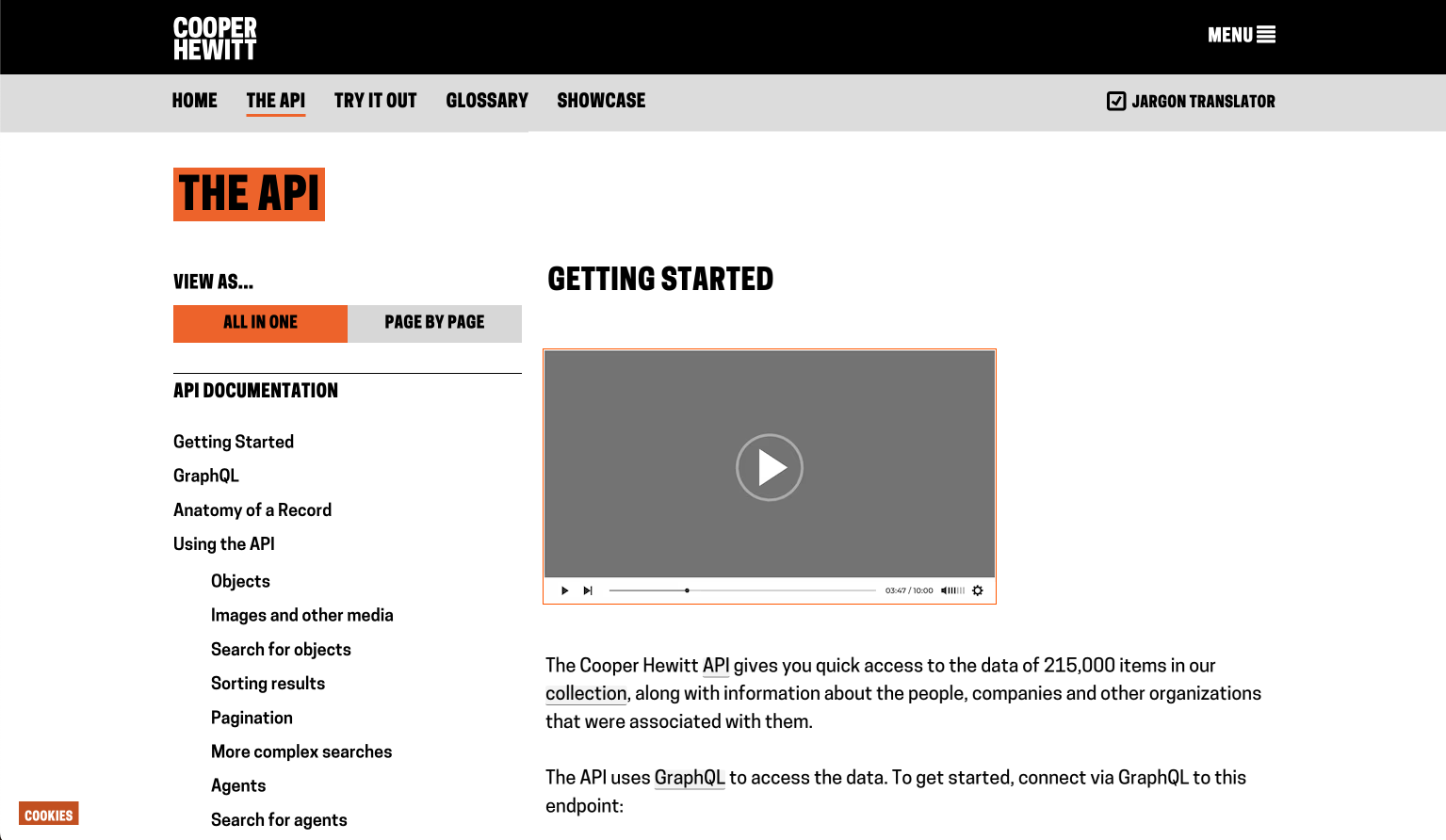

After our initial meeting with the Cooper Hewitt Museum, we were able to identify three key aspects of their new API documentation for evaluation: its discoverability, readability, and learnability. Another significant factor to consider was that our client wanted to develop this assessment from the perspective of two distinct user groups: novice users and advanced users.

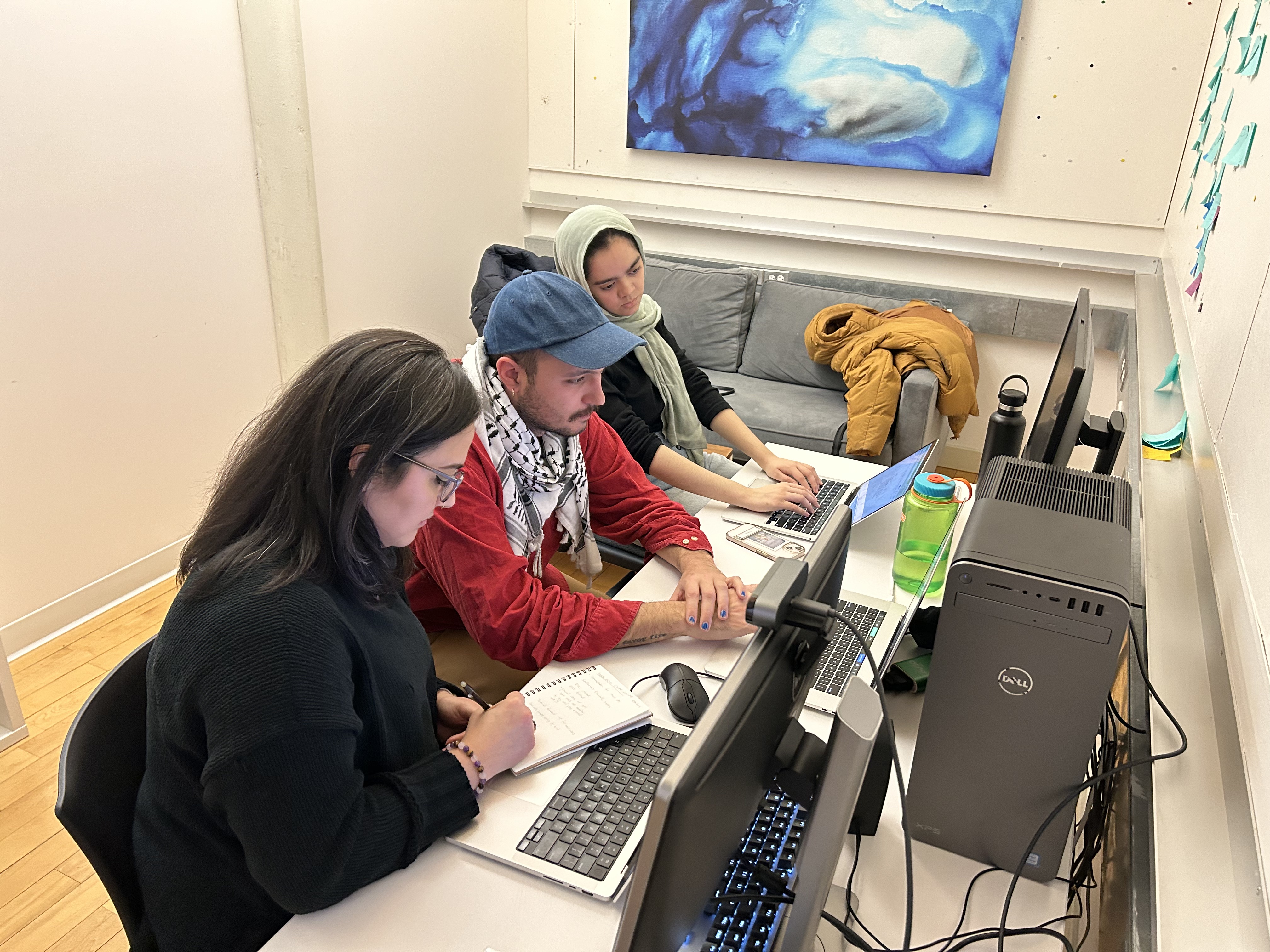

Above image: Our initial client kickoff meeting

This presented a unique challenge. With a budget that allowed for only six participants, we aimed to find at least three participants for each desired user group.

Designing the Study

Presented with such a broad scope, our first priority was to design a study that was equal parts exploratory and precise.

First, we broke down what exactly users needed to learn, and how they would go about learning it. We began by researching how other cultural institutions were presenting their API documentation and comparing it with Cooper Hewitt’s. Competitors analyzed included the Art Institute of Chicago, the Rijksmuseum, and the Metropolitan Museum of Art. We found that Cooper Hewitt was doing a solid job following the standard practices of other API sites, so we moved on to defining the tasks for our study.

Task 1: Find the API documentation from the home page.

One of the requests from our client was to find an appropriate inpoint from the main site. We could accomplish this by simply asking users to look for the page from the existing information architecture of the homepage.

Task 2: Find an example of how the Cooper Hewitt API has been used in other projects and explore.

As part of the desk research we conducted upfront, we found that examples and visual aids played a big role in helping users understand API documentation. In tasking users to find examples within the documentation, we wanted to gain insight into the potential gaps for users when trying to understand the realized utility of the API.

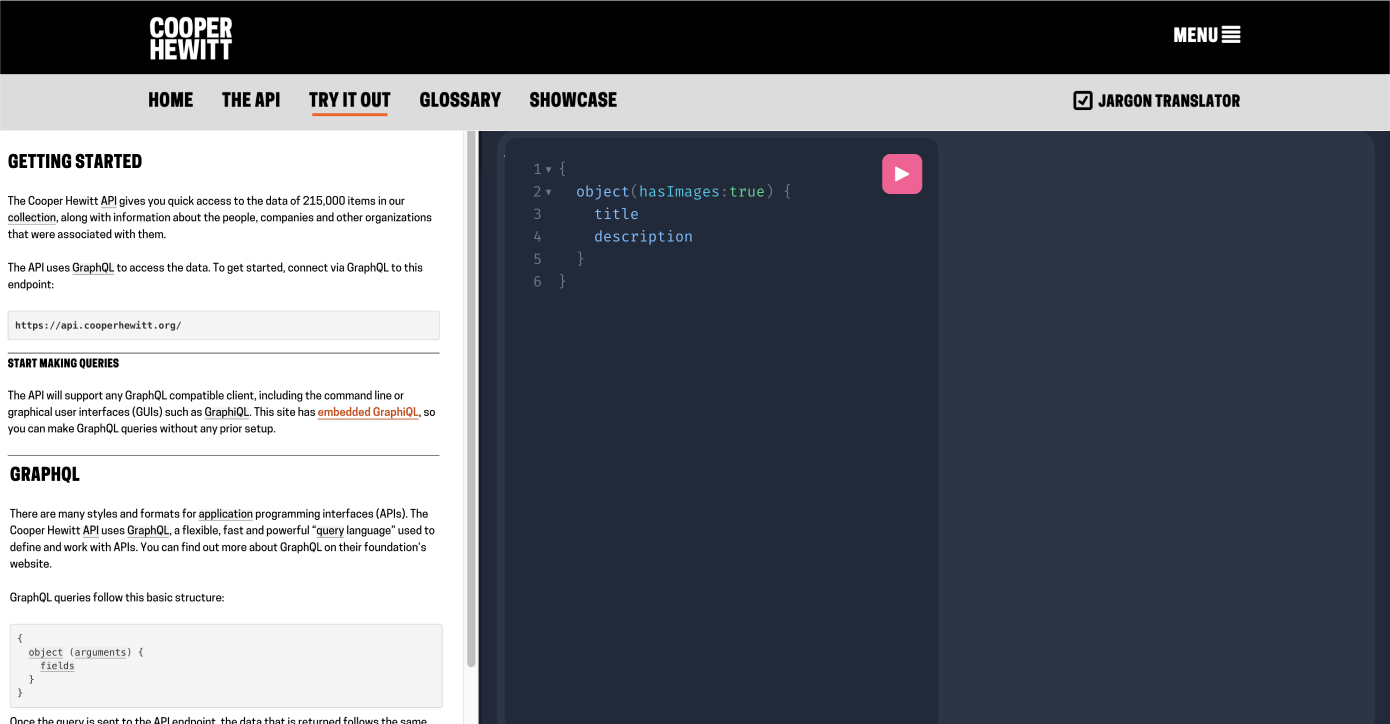

Task 3: Consult the API documentation and run a query. Now modify it!

The built-in code editor was a primary feature that gave us an opportunity to directly correlate participants’ learning efforts with their information retention. Our hypothesis was that, based on their reading of the accompanying documentation, users would be able to run and understand queries in the GraphQL editor.

Recruiting Participants

Our next challenge was finding participants that matched our target users. To accomplish this, we first needed to define what qualified a novice user and an advanced user.

We drafted a recruitment survey that collected information on the number of years spent professionally working with APIs, and then asked participants to rate their own understanding of API technology on a scale of 1 to 10. Folks who had less than 5 years’ exposure to APIs or rated their understanding from 1 to 5 were grouped as novices, and those who had greater than 5 years’ experience or rated their understanding between 5 and 10 were grouped as advanced users.

Above image: slide 35 of our findings report

In hindsight, it would have been worthwhile to spend more time recruiting advanced users. The self-reported experience left too much room for subjective opinions of ability, and having a clearer benchmark might have given clearer data.
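To illustrate why a clearer benchmark might have helped, here is a minimal Python sketch that reads the screening rule literally. The thresholds come from the recruitment survey described above; the function names and the sample respondents are hypothetical, and how overlapping cases were actually resolved during recruitment is not covered by this sketch.

```python
# Literal reading of the screening rule. Thresholds are from the write-up;
# function names and the sample respondents below are hypothetical.

def matches_novice(years: float, rating: int) -> bool:
    """Less than 5 years with APIs, OR a self-rating from 1 to 5."""
    return years < 5 or 1 <= rating <= 5


def matches_advanced(years: float, rating: int) -> bool:
    """More than 5 years with APIs, OR a self-rating from 5 to 10."""
    return years > 5 or 5 <= rating <= 10


def groups_for(years: float, rating: int) -> list:
    """Return every group label a respondent qualifies for."""
    groups = []
    if matches_novice(years, rating):
        groups.append("novice")
    if matches_advanced(years, rating):
        groups.append("advanced")
    return groups


if __name__ == "__main__":
    # Hypothetical respondents as (years of experience, self-rating).
    for years, rating in [(2, 3), (8, 9), (8, 4), (2, 5)]:
        print(years, rating, groups_for(years, rating))
```

Read this way, a respondent with many years of experience but a low self-rating, or anyone who rates themselves exactly 5, qualifies for both groups, which is the kind of ambiguity a firmer benchmark would remove.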

Post Test Questionnaire

To measure success in users’ learning experience, we initially planned on presenting participants with a “knowledge quiz” that would ask questions about the API and how it works. After considering time constraints and the cognitive load such a quiz would add, we decided we did not have the appropriate resources to facilitate such a test.

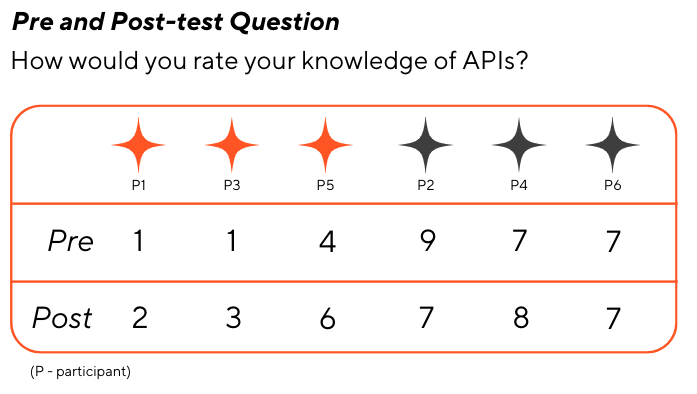

In the end, we added the same question from the recruitment form that asked users to report their own API knowledge to the post test questionnaire:

Above image: slide 13 of our findings report


Comparing the two answers showed an average 14% increase in users’ self-perceived knowledge after using the site.
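An average increase like this can be computed in more than one way, and the two common approaches can give different numbers. The Python sketch below shows both under stated assumptions: the function names are hypothetical, the ratings are made-up placeholders rather than our participants’ answers, and it does not claim to reproduce the exact calculation behind the 14% figure.

```python
from statistics import mean


def average_percent_increase(before, after):
    """Mean of each participant's individual percent change."""
    if len(before) != len(after):
        raise ValueError("before and after must have the same length")
    changes = [(a - b) / b * 100 for b, a in zip(before, after)]
    return mean(changes)


def percent_increase_of_means(before, after):
    """Percent change between the group's mean rating before and after."""
    if len(before) != len(after):
        raise ValueError("before and after must have the same length")
    return (mean(after) - mean(before)) / mean(before) * 100


if __name__ == "__main__":
    # Placeholder self-ratings on the 1 to 10 scale (NOT study data).
    before = [2, 4, 5, 6, 8, 5]
    after = [3, 4, 6, 7, 8, 6]
    print(round(average_percent_increase(before, after), 1))
    print(round(percent_increase_of_means(before, after), 1))
```

With only six participants, a single person moving one point on the scale shifts either figure noticeably, so a number like this is best read as directional.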

What We Found

Each moderated session consisted of 20 minutes of eye tracking and 20 minutes of follow-up interviews, and the sessions yielded varying results. Due to the exploratory nature of our research goals, we found very few consistent trends in quantitative user behavior. We were, however, able to identify common issues that users faced when engaging with the platform.

The bulk of our analysis consisted of poring over the recordings of our sessions and pulling quotes when users called out specific gaps in their experience or components they would have wanted to ease their experience.

After compiling the quotes and distilling associated problems into a problem sheet, we went through each problem and assigned a severity rating on a scale of 1 to 4, with 1 being the least severe and 4 the most.

Above image: problem sheet analysis

Recommendations

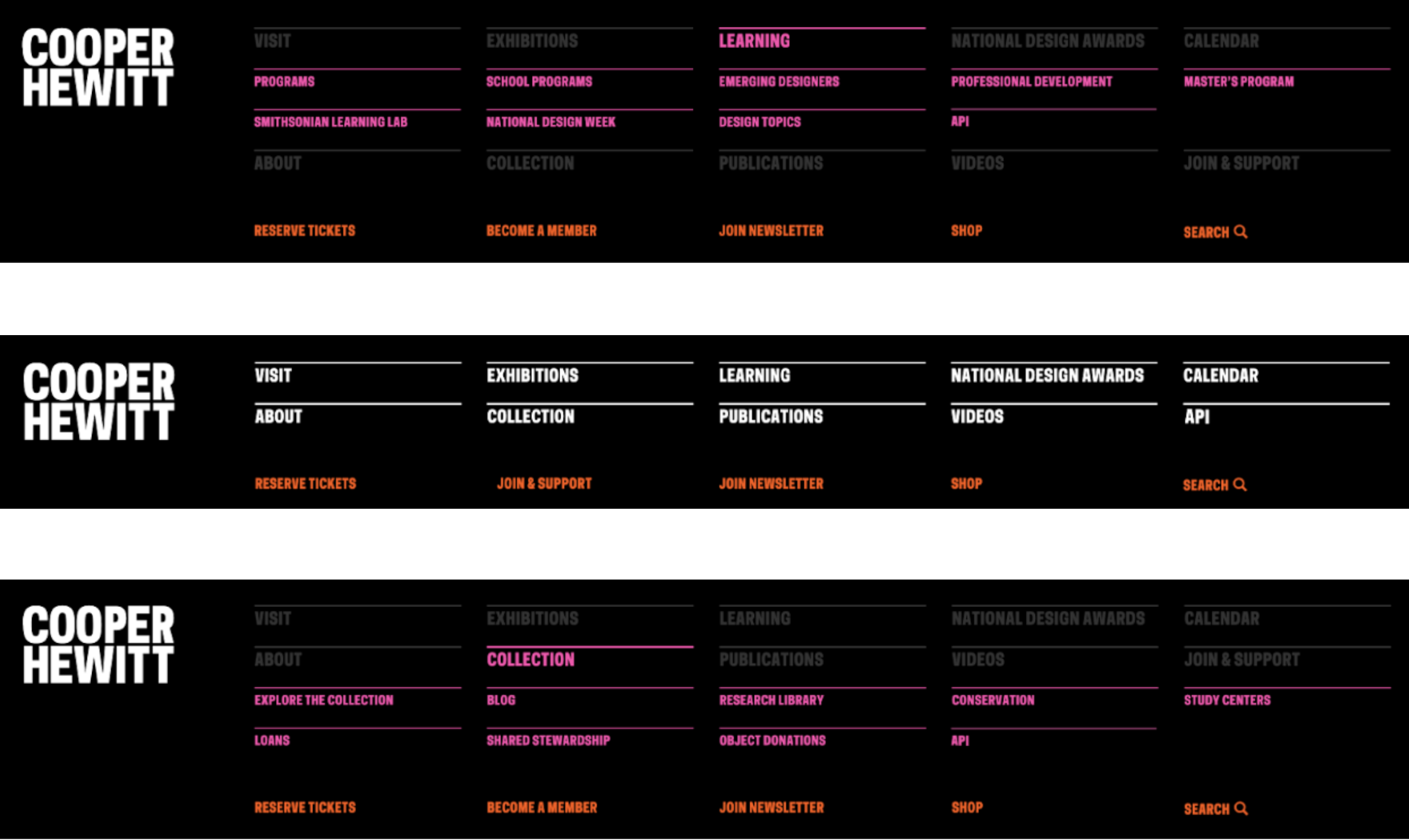

Recommendation 1: Include the API Page in the Navigation

First, we suggested that a link directly to the API be nested in the global navigation of the main site. This would increase SEO numbers and also provide novice users with a clearer inpoint to the documentation.

Recommendation 2: Provide Search Its Own Field

Recommendation 3: Add Images and Details on Search Results

We also noted that 4 out of 6 users ended up using the search function to navigate to the API. This led us to encourage Cooper Hewitt to standardize visuals and copy for the search results page, as 3 of those 4 users completely skipped over the API page result as it did not have images or a description.

Recommendation 4: Consolidate Projects

The new API page presented users with 5 separate sections, 3 of which included various kinds of helpful examples: the homepage, “The API”, and “Showcase.” All 6 of our participants had difficulty finding examples of how the API could be implemented, so we recommended that Cooper Hewitt consolidate all of their examples onto one page to make finding them easier.

Recommendation 5: Feature Projects

Building on the previous recommendation, we specified that the new consolidated API page feature the examples as the first section of content at the top of the page.

Recommendation 6: Add a Panel to the Editor

During our third task, running a query, all 6 users had trouble understanding how to do so. We observed that all of our participants spent a significant amount of time navigating back and forth between the code editor and the documentation trying to learn the syntax of the API.

To mitigate this, we suggested adding a third panel to the in-browser editor to incorporate helpful documentation right into the environment.

Recommendation 7: Provide a Video Tutorial

Our final recommendation came directly from our participants. During our interviews, 3 out of 6 users explicitly named video tutorials as particularly helpful in learning new technologies. We suggested incorporating such a video into the documentation to further support users’ learning efforts.

Reflections

Upon completing the study, our team was able to pinpoint a few aspects we would do differently in hindsight:

More deliberate participant screening for the two user groups.

As mentioned earlier, digging deeper into potential benchmarks of ability might have given some cleaner delineations between the user groups.

A more solid knowledge test to get more robust quantitative data on the success of the current site.

If we had spent more time exploring users’ knowledge retention, we could have had more opportunities to find patterns in learning gaps.

More expansive methods for testing other than eye-tracking.

While it was a good experience to become familiar with eye-tracking technology, this particular study could have benefitted from a more flexible and natural study setting. Making mockups of our recommendations and iterating on them has greater potential for a speedier resolution.

Next Steps

Going forward, we’d like to make high-fidelity mockups of our solutions and test their efficacy in supporting users’ learning of the API.

Thank you!

Thanks for reading this far :) If you’d like to know more, or have any questions, please feel free to reach out via email